
from selenium import webdriver

from selenium.webdriver.common.keys import Keys

import time

from bs4 import BeautifulSoup

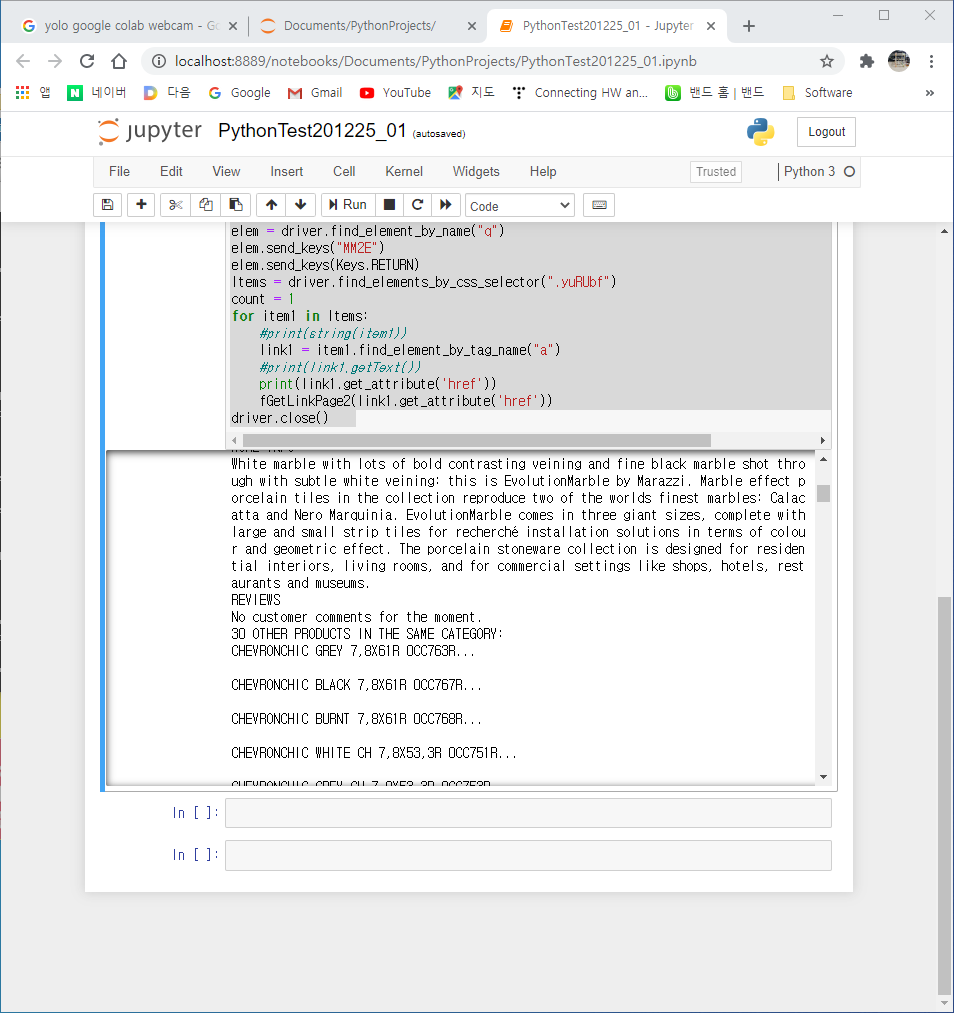

def fGetLinkPage(linkAddress):

driver = webdriver.Chrome()

driver.get(linkAddress)

try:

title1 = driver.find_element_by_tag_name('title')

print("[title]", title1.text)

except :

print("[Excepted]-TITLE")

try:

body1 = driver.find_element_by_tag_name('body')

print("[body]", body1.text)

except :

print("[Excepted-BODY]")

driver.close()

def fGetLinkPage2(linkAddress):

driver = webdriver.Chrome()

driver.get(linkAddress)

try:

print(driver.title)

except :

print("[Excepted]-TITLE")

try:

body1 = driver.find_element_by_tag_name('body')

print("[body]", body1.text)

except :

print("[Excepted-BODY]")

driver.close()

driver = webdriver.Chrome(executable_path=r'C:\Users\Bilient\Documents\PythonProjects\chromedriver.exe')

driver.get("https://www.google.com")

elem = driver.find_element_by_name("q")

elem.send_keys("MM2E")

elem.send_keys(Keys.RETURN)

Items = driver.find_elements_by_css_selector(".yuRUbf")

count = 1

for item1 in Items:

#print(string(item1))

link1 = item1.find_element_by_tag_name("a")

#print(link1.getText())

print(link1.get_attribute('href'))

fGetLinkPage2(link1.get_attribute('href'))

driver.close()
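The script above launches a full Chrome window for every search result, which is slow. When a result page does not depend on JavaScript, you can recover roughly the same title and body text from the raw HTML alone. The following is a minimal sketch that uses Python's standard-library `html.parser` in place of Selenium. This is a swapped-in technique, not the author's method: it does not execute scripts, so pages rendered by JavaScript will come back mostly empty. The names `TitleBodyParser` and `extract_title_body` are hypothetical, as are two design choices: skipping `<script>`/`<style>`/`<noscript>` contents and joining body text with single spaces. Selenium's `.text` preserves line breaks instead.

```python
from html.parser import HTMLParser


class TitleBodyParser(HTMLParser):
    """Hypothetical helper: collects text inside <title> and visible text inside <body>."""

    # Assumption: contents of these tags are not visible page text, so they are skipped.
    SKIP = {"script", "style", "noscript"}

    def __init__(self):
        super().__init__()
        self.title_parts = []
        self.body_parts = []
        self._in_title = False
        self._in_body = False
        self._skip_depth = 0  # > 0 while inside a skipped tag

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "body":
            self._in_body = True
        elif tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag == "body":
            self._in_body = False
        elif tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth:
            return
        if self._in_title:
            self.title_parts.append(data)
        elif self._in_body:
            text = data.strip()
            if text:
                self.body_parts.append(text)


def extract_title_body(html):
    """Return (title, body_text) parsed from an HTML string (hypothetical helper)."""
    parser = TitleBodyParser()
    parser.feed(html)
    parser.close()
    title = "".join(parser.title_parts).strip()
    body = " ".join(parser.body_parts)
    return title, body


if __name__ == "__main__":
    sample = ("<html><head><title> MM2E </title></head><body><h1>Hello</h1>"
              "<script>var x=1;</script><p>World</p></body></html>")
    print(extract_title_body(sample))  # ('MM2E', 'Hello World')
```

Inside the Selenium loop, you could also pass `driver.page_source` to `extract_title_body` after `driver.get(...)`. That way one browser instance is reused for every result instead of a new one being started per link.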
